Breaking Down: ChatGPT

Want to know how a new-age AI like ChatGPT works? Check out this blog to understand it in detail.

Introduction

The world is changing very rapidly, and keeping up with that pace is hard. Recently, OpenAI introduced a prototype of a chat-based model called ChatGPT, which went viral. OpenAI is an AI research and deployment company located in San Francisco.

Have you ever wondered how ChatGPT works under the hood? Before we get to that, let us understand what ChatGPT is.

What is ChatGPT?

ChatGPT is an NLP (Natural Language Processing) model built by OpenAI. It interacts with the user conversationally. The dialogue format makes it possible for it to answer follow-up questions, admit its mistakes, challenge incorrect premises and reject inappropriate requests.

ChatGPT is fine-tuned from a model in the GPT (Generative Pretrained Transformer) 3.5 series, which finished training in early 2022. The model does not learn from a conversation while we are using it; its weights are fixed once training ends. Conversations and user feedback may, however, be used by OpenAI to train improved versions later.

To understand ChatGPT, we first have to understand what NLP and GPT are.

Natural Language Processing (NLP)

NLP sits at the intersection of linguistics and computer science. It is a subfield of AI that focuses on enabling a computer to understand, interpret and generate human language. It is concerned with the interaction between a computer and a human being.

NLP is the core of ChatGPT.

As we know, a computer does not understand languages like English that we speak or write in our day-to-day life. A computer only understands numbers and vectors. So, to make the computer understand the sentences we speak or write, a classic NLP pipeline passes each sentence through a 7-step process.

Segmentation

The first step of the processing is segmentation. It splits the sentence into individual words and removes punctuation. Basically, it tokenizes the sentence. Let me explain what a token is: according to OpenAI, tokens are pieces of words that the model reads as its basic units. As a rule of thumb for English text, 1 token is approximately 4 characters or 0.75 words.

For Example:-

| Index | Word |
| --- | --- |
| 0 | I |
| 1 | am |
| 2 | learning |
| 3 | ChatGPT |
| 4 | right |
| 5 | now |
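The split shown in the table can be sketched in a few lines of Python. This is a minimal illustration, not OpenAI's tokenizer: the function names `segment` and `estimate_tokens` are made up for this post, and the token estimate simply applies the "about 4 characters per token" rule of thumb.

```python
import re


def segment(sentence: str) -> list[str]:
    """Split a sentence into words and drop punctuation."""
    # \w+ matches runs of letters, digits and underscores, so punctuation is skipped.
    return re.findall(r"\w+", sentence)


def estimate_tokens(text: str) -> int:
    """Rough token count from the ~4 characters per token rule of thumb."""
    return max(1, round(len(text) / 4))


sentence = "I am learning ChatGPT right now."
print(segment(sentence))
print(estimate_tokens(sentence))
```

Real tokenizers split text into sub-word pieces, so the actual token count for a sentence can differ from this estimate.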

Tokenization

The words from the previous step now go through the next step, which is called tokenization here. In this step, the words are converted to a standard format, such as lowercase (this part is more commonly known as normalization).

For Example:-

| Index | Word |
| --- | --- |
| 0 | i |
| 1 | am |
| 2 | learning |
| 3 | chatgpt |
| 4 | right |
| 5 | now |
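As a small sketch of this step, lowercasing a list of words takes one line in Python. The helper name `normalize` is an assumption made for this example.

```python
def normalize(words: list[str]) -> list[str]:
    """Convert every word to lowercase so that 'ChatGPT' and 'chatgpt' match."""
    return [word.lower() for word in words]


print(normalize(["I", "am", "learning", "ChatGPT", "right", "now"]))
```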

Stop Words

The next step is Stop Words. It removes the words that do not contribute much to the meaning of the sentence, which reduces the amount of data that needs to be processed.

| Index | Word |
| --- | --- |
| 0 | i |
| 1 | learning |
| 2 | chatgpt |
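A stop-word filter can be sketched as a simple set lookup. The `STOP_WORDS` set below is a tiny, hypothetical list chosen only to reproduce the table above; real stop-word lists are much longer and usually include pronouns such as "i" as well.

```python
# Hypothetical, deliberately tiny stop-word list for this example only.
STOP_WORDS = {"am", "right", "now", "the", "a", "is"}


def remove_stop_words(words: list[str]) -> list[str]:
    """Keep only the words that are not in the stop-word list."""
    return [word for word in words if word not in STOP_WORDS]


print(remove_stop_words(["i", "am", "learning", "chatgpt", "right", "now"]))
```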

Stemming

The 4th step is Stemming. In this step, the words of the sentence are converted into their base form, or stem, which improves the model's ability to generalize and recognize patterns. Stemming algorithms do this by removing suffixes and leaving the word with its core meaning.

For Example:-

| Index | Word |
| --- | --- |
| 0 | i |
| 1 | learn |
| 2 | chatgpt |
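To make suffix removal concrete, here is a deliberately naive stemmer. It is a sketch under simple assumptions (a short, hand-picked suffix list and a minimum stem length of 3), not an established algorithm such as the Porter stemmer.

```python
# Hand-picked suffixes for illustration; real stemmers use many more rules.
SUFFIXES = ("ing", "ed", "ly", "s")


def stem(word: str) -> str:
    """Strip the first matching suffix, as long as at least 3 letters remain."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


print([stem(word) for word in ["i", "learning", "chatgpt"]])
```

Because it only chops characters, a stemmer like this can produce stems that are not real words, which is the gap the next step tries to close.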

Lemmatization

The next step is Lemmatization. This step is similar to Stemming, but instead of chopping off suffixes it reduces each word to its dictionary form (its lemma). For example, "am" becomes "be".
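Reducing a word to its dictionary form is usually done with a vocabulary lookup rather than by cutting off suffixes. The sketch below assumes a tiny hand-written table, `LEMMAS`, which is hypothetical; real systems rely on a full dictionary and the word's part of speech.

```python
# Hypothetical mini-dictionary mapping word forms to their dictionary form.
LEMMAS = {
    "am": "be",
    "is": "be",
    "learning": "learn",
    "better": "good",
    "mice": "mouse",
}


def lemmatize(word: str) -> str:
    """Return the dictionary form of a word, or the word itself if unknown."""
    return LEMMAS.get(word, word)


print([lemmatize(word) for word in ["am", "learning", "mice", "chatgpt"]])
```

Note that "mice" and "better" cannot be handled by suffix stripping at all, which is the practical difference from stemming.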

Part-of-Speech Tagging

The 6th step is Part-of-Speech Tagging. This process tags each word with its part of speech, such as noun, verb or adjective.

For Example:-

// The machine understands vectors and numbers, so here the part-of-speech tags are stored in a 2D array in which each token/word is represented in binary form.

[

[0 0 0 1] # pronoun

[0 1 0 0] # auxiliary verb

[0 0 1 0] # verb

[0 0 0 0] # proper noun

[1 0 0 0] # stop word

[0 1 0 1] # noun

[1 0 0 0] # stop word

]
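One common way to turn tags into vectors like these is one-hot encoding, where each tag gets its own position in the vector. The sketch below is only an illustration: the tag set `TAGS` and the lookup table `LEXICON` are hypothetical, and a real tagger predicts tags from context instead of looking them up.

```python
# Hypothetical tag set and word-to-tag table for this example only.
TAGS = ["pronoun", "verb", "proper noun", "adverb"]
LEXICON = {
    "i": "pronoun",
    "am": "verb",
    "learning": "verb",
    "chatgpt": "proper noun",
    "right": "adverb",
    "now": "adverb",
}


def one_hot(tag: str) -> list[int]:
    """Return a vector with a 1 at the position of the tag and 0 elsewhere."""
    return [1 if candidate == tag else 0 for candidate in TAGS]


def tag_words(words: list[str]) -> list[tuple[str, str, list[int]]]:
    """Pair every known word with its tag and the tag's one-hot vector."""
    return [(word, LEXICON[word], one_hot(LEXICON[word])) for word in words]


for word, tag, vector in tag_words(["i", "am", "learning", "chatgpt"]):
    print(word, tag, vector)
```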

Named Entity Recognition

The last step is called Named Entity Recognition. In this step, the machine tries to recognize the names of entities such as people, organizations or locations.

After these 7 steps, the NLP model has the preprocessed data it needs to predict the next word or to suggest a sentence that is more relevant or correct than the current one.

A good example of NLP is the editor used on Hashnode itself. It uses Grammarly to improve sentences and make them more correct.
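The simplest form of this step is a lookup against a list of known names. The sketch below assumes a small hypothetical table, `KNOWN_ENTITIES`; production systems use trained models that can also recognize names they have never seen.

```python
# Hypothetical list of known names and their entity types.
KNOWN_ENTITIES = {
    "openai": "ORGANIZATION",
    "san francisco": "LOCATION",
    "chatgpt": "PRODUCT",
}


def find_entities(text: str) -> list[tuple[str, str]]:
    """Return (name, type) for every known entity mentioned in the text."""
    lowered = text.lower()
    return [(name, kind) for name, kind in KNOWN_ENTITIES.items() if name in lowered]


print(find_entities("OpenAI is located in San Francisco."))
```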

Now, let's understand GPT.

GPT (Generative Pretrained Transformers)

GPT was first introduced by OpenAI in 2018; GPT-3, the version discussed here, followed in 2020. GPT is a pre-trained model that can generate human-like text. OpenAI fed the model the data it needed to learn this task: text gathered by crawling the internet (OpenAI used a publicly available dataset called CommonCrawl, roughly 45TB of text before filtering), along with some additional text selected by OpenAI and some text from Wikipedia.

GPT-3 is built on Transformers. It is pre-trained to predict the next token in a piece of text; Reinforcement Learning comes in later, when the model is fine-tuned into ChatGPT. Let me start by explaining what Transformers are.

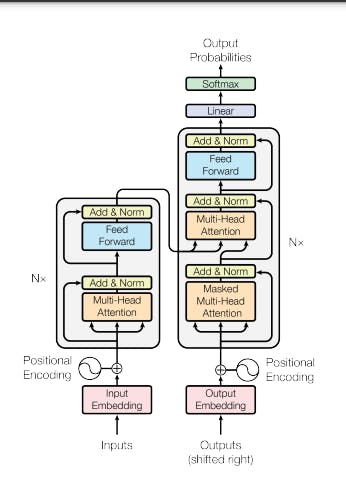

A Transformer contains two things: an encoder and a decoder.

The encoder reads the input sequence and converts it into vectors that capture the meaning of each word in its context. The decoder then produces the output sequence. Together they perform a sequence-to-sequence transformation, i.e., the model takes in a sequence of words, such as a question or a sentence, and outputs another sequence, such as an answer to the question. GPT models use only the decoder part of this design.

Transformers are a Machine Learning architecture that was introduced in 2017 in a paper called "Attention Is All You Need".

Transformers rely on a self-attention mechanism that focuses on the most relevant parts of the input to get the desired output. The mechanism tries to make sense of the input, or rather tries to understand the context of the input text. It calculates how much attention each vector/word should receive based on the other vectors/words. Transformers work so well because they capture this context.

Transformers process all positions of the input sequence in parallel, which makes training efficient, although the input length is limited by the model's context window. On many language tasks they outperform RNNs (Recurrent Neural Networks) and CNNs (Convolutional Neural Networks).
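The attention calculation can be sketched in plain Python. This is a simplified version of scaled dot-product attention in which the queries, keys and values are all the input vectors themselves; a real Transformer first multiplies the inputs by learned weight matrices and runs several attention heads in parallel. The function names are chosen for this example.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into weights that are positive and sum to 1."""
    highest = max(scores)
    exps = [math.exp(score - highest) for score in scores]
    total = sum(exps)
    return [value / total for value in exps]


def self_attention(vectors: list[list[float]]) -> list[list[float]]:
    """Simplified self-attention: queries, keys and values are the inputs themselves."""
    dim = len(vectors[0])
    outputs = []
    for query in vectors:
        # Score every word against the current word, scaled by sqrt(dimension).
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        # The output is the attention-weighted average of all word vectors.
        outputs.append(
            [sum(w * value[j] for w, value in zip(weights, vectors)) for j in range(dim)]
        )
    return outputs


word_vectors = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in self_attention(word_vectors):
    print([round(x, 3) for x in row])
```

Each output row is a blend of all input vectors, weighted by how similar they are to the word being processed, which is how context gets mixed into every word.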

This is the base on which ChatGPT works. Now, let's talk about ChatGPT and discuss what OpenAI did differently with ChatGPT.

ChatGPT

With ChatGPT, OpenAI got a little creative and used a variety of different Machine Learning techniques to get the best results.

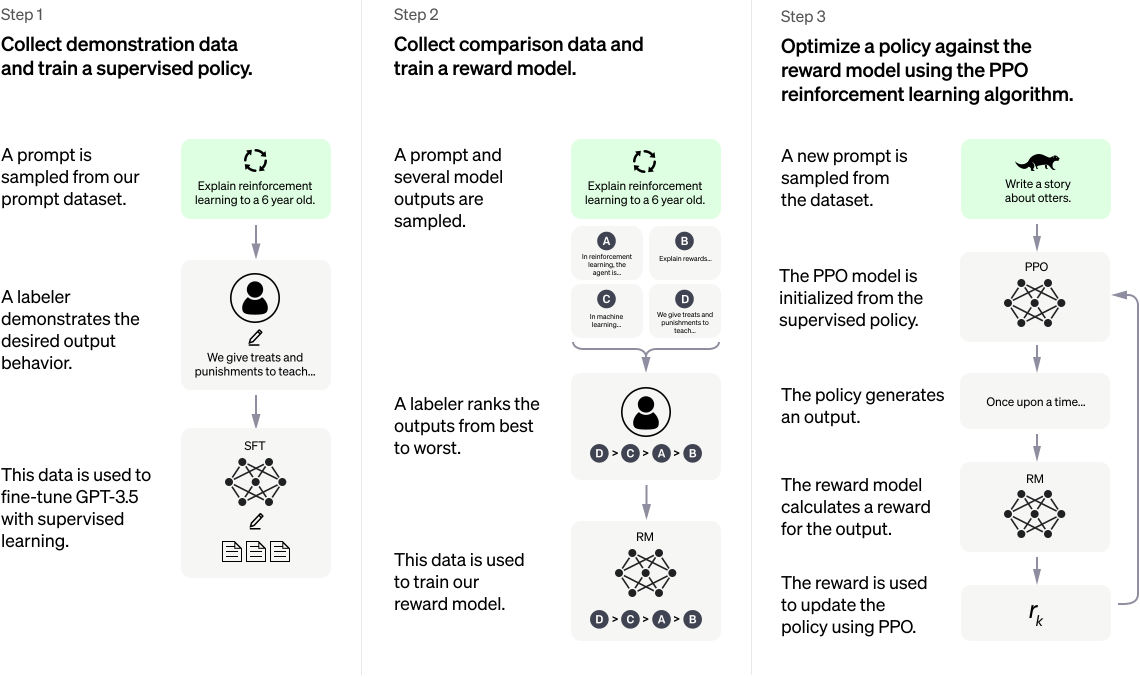

The initial model was trained with Supervised Fine-Tuning. Human AI trainers wrote conversations in which they played both sides: the user and the AI assistant. In this way, a lot of prompts were created, each pairing a user's input with the response the assistant should give.

They also trained a reward model with a similar process. A prompt is paired with several model outputs (four in this case), and a labeler ranks the outputs from best to worst. These rankings are used to train the reward model, and ChatGPT is then fine-tuned to maximize that reward using Reinforcement Learning (a setup in which the AI gets a reward when it takes a good step).
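One common way to learn from such rankings is to break each ranking into pairs of "preferred" and "rejected" outputs and penalize the reward model whenever it scores the rejected output higher. The sketch below shows that idea under stated assumptions: the function names and the reward values are illustrative, and this is not OpenAI's training code.

```python
import math
from itertools import combinations


def ranking_to_pairs(ranked_best_to_worst: list[str]) -> list[tuple[str, str]]:
    """Turn a best-to-worst ranking into (preferred, rejected) pairs."""
    return list(combinations(ranked_best_to_worst, 2))


def pairwise_loss(reward_preferred: float, reward_rejected: float) -> float:
    """-log(sigmoid(difference)): small when the preferred output scores higher."""
    difference = reward_preferred - reward_rejected
    return -math.log(1.0 / (1.0 + math.exp(-difference)))


pairs = ranking_to_pairs(["A", "B", "C", "D"])
print(len(pairs), pairs[0])

# Illustrative reward scores, not real model outputs.
print(round(pairwise_loss(2.0, 0.0), 3))  # reward model agrees with the labeler
print(round(pairwise_loss(0.0, 2.0), 3))  # reward model disagrees with the labeler
```

A ranking of four outputs yields six comparisons, so a single labeled prompt gives the reward model several training signals.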

This is how ChatGPT was designed.

Thank you.